Building a local and offline private desktop AI app using LLMs

I’ve been very interested in building offline and private AI applications.

If your computer can handle it, as most Apple laptops with an M1 Pro or newer chip can, running models locally can improve a lot of workflows.

Even outside of work, I often need to organize personal files such as documents, bills, or photos. Today, the only reliable way to use automated organization tools is through cloud services. Unfortunately, that means uploading your files to a third-party service, which can be a privacy concern.

By pairing a local LLM with a desktop app, which you can write with Electron, you get a private, offline application that uses the LLM as an agent for one very specific task. For instance, it could automatically sort all your photos into categories such as portraits, the cities they were taken in, or any other details that vision models like LLaVA or Moondream can pick up.
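As a rough sketch of that kind of single-purpose task, assume the app talks to a local Ollama server (the inference server chatd relies on, discussed below). Ollama's `/api/generate` endpoint accepts a list of base64-encoded images for multimodal models such as `llava` or `moondream`. The helper names, the prompt wording, and the category list are hypothetical illustrations, not code from chatd or any other app.

```typescript
// Hypothetical helpers for classifying one photo with a local multimodal model.
// ASSUMPTION: the server accepts an Ollama-style /api/generate body
// with model, prompt, images (base64 strings) and stream fields.

interface ImageGenerateRequest {
  model: string;
  prompt: string;
  images: string[]; // base64-encoded image data
  stream: boolean;
}

// Build a request that asks the model to answer with exactly one category.
function buildClassificationRequest(
  model: string,
  imageBytes: Uint8Array,
  categories: string[],
): ImageGenerateRequest {
  const prompt =
    `Classify this photo into exactly one of these categories: ${categories.join(", ")}. ` +
    "Answer with the category name only.";
  return {
    model,
    prompt,
    images: [Buffer.from(imageBytes).toString("base64")],
    stream: false, // ask for a single JSON reply instead of streamed chunks
  };
}

// Map the model's free-form answer back onto a known category.
// Models don't always follow instructions, so fall back to "uncategorized".
function parseCategory(answer: string, categories: string[]): string {
  const normalized = answer.trim().toLowerCase().replace(/[.!"']+$/, "");
  const exact = categories.find((c) => c.toLowerCase() === normalized);
  if (exact !== undefined) return exact;
  const mentioned = categories.find((c) => normalized.includes(c.toLowerCase()));
  return mentioned ?? "uncategorized";
}
```

You would send `JSON.stringify(request)` to the server and pass the `response` field of the reply to `parseCategory`. Constraining the answer to a fixed list keeps the output easy to act on, for example to move each file into a matching folder.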

You could also have your app connect to Messages.app or the Photos library, read their SQLite databases, and build search, insights, or any other AI-powered feature on top of them.
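For the Messages idea, the LLM side could look roughly like the sketch below. It assumes you have already read rows from the Messages database with a SQLite driver of your choice. The `message` table and its `text` and `date` columns reflect the commonly reported schema of the Messages database and should be treated as an assumption, and `buildInsightPrompt` is a hypothetical helper.

```typescript
// Hypothetical sketch: turn message rows (already read from SQLite) into a prompt.
// ASSUMPTION: rows come from a query along the lines of MESSAGES_QUERY below;
// the table and column names are not verified against every macOS version.

const MESSAGES_QUERY =
  "SELECT text FROM message WHERE text IS NOT NULL ORDER BY date DESC LIMIT 200";

interface MessageRow {
  text: string | null;
}

// Keep the prompt within a rough character budget so it fits the model's context window.
function buildInsightPrompt(question: string, rows: MessageRow[], maxChars = 4000): string {
  const lines: string[] = [];
  let used = 0;
  for (const row of rows) {
    if (!row.text) continue; // skip attachments or empty messages
    const line = `- ${row.text.replace(/\s+/g, " ").trim()}`;
    if (used + line.length + 1 > maxChars) break; // +1 for the joining newline
    lines.push(line);
    used += line.length + 1;
  }
  return [
    "Here are some of my recent text messages:",
    ...lines,
    "",
    `Question: ${question}`,
  ].join("\n");
}
```

The resulting string can be sent to the local model like any other prompt; because nothing leaves the machine, this kind of feature stays private.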

I was very excited when I found chatd and realized that it bundles not only the client app but also the model and the inference server in a single app. This means the server runs locally, you can pick any model that Ollama supports, and you end up with a private, offline AI application. The only downsides are that it is only as powerful as your machine, and that it needs to download several gigabytes of model weights before it can do anything.
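To make the "local inference server" part concrete, here is a minimal sketch of asking it for a completion. It assumes the bundled Ollama server exposes its standard REST API on the default port 11434 and that the model (for example `llama3`) has already been pulled; it is not necessarily how chatd's own code calls the server. The `generate` function and `FetchLike` type are hypothetical names.

```typescript
// Minimal sketch: request a completion from a locally running Ollama server.
// ASSUMPTION: Ollama listens on its default address and the model is already pulled.

type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

interface GenerateResponse {
  response: string;
}

// 127.0.0.1 rather than "localhost" avoids resolving to IPv6 (::1) on some systems.
const OLLAMA_URL = "http://127.0.0.1:11434/api/generate";

async function generate(model: string, prompt: string, fetchImpl: FetchLike): Promise<string> {
  const res = await fetchImpl(OLLAMA_URL, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    // stream: false asks for one JSON object instead of newline-delimited chunks.
    body: JSON.stringify({ model, prompt, stream: false }),
  });
  if (!res.ok) {
    throw new Error(`Ollama returned HTTP ${res.status}`);
  }
  const data = (await res.json()) as GenerateResponse;
  if (typeof data.response !== "string") {
    throw new Error("unexpected response shape");
  }
  return data.response;
}
```

In a Node 18+ or Electron main process you could call `await generate("llama3", "Summarize this bill", fetch)`. Passing the fetch function in, rather than hard-coding the global, makes it easy to stub in tests.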

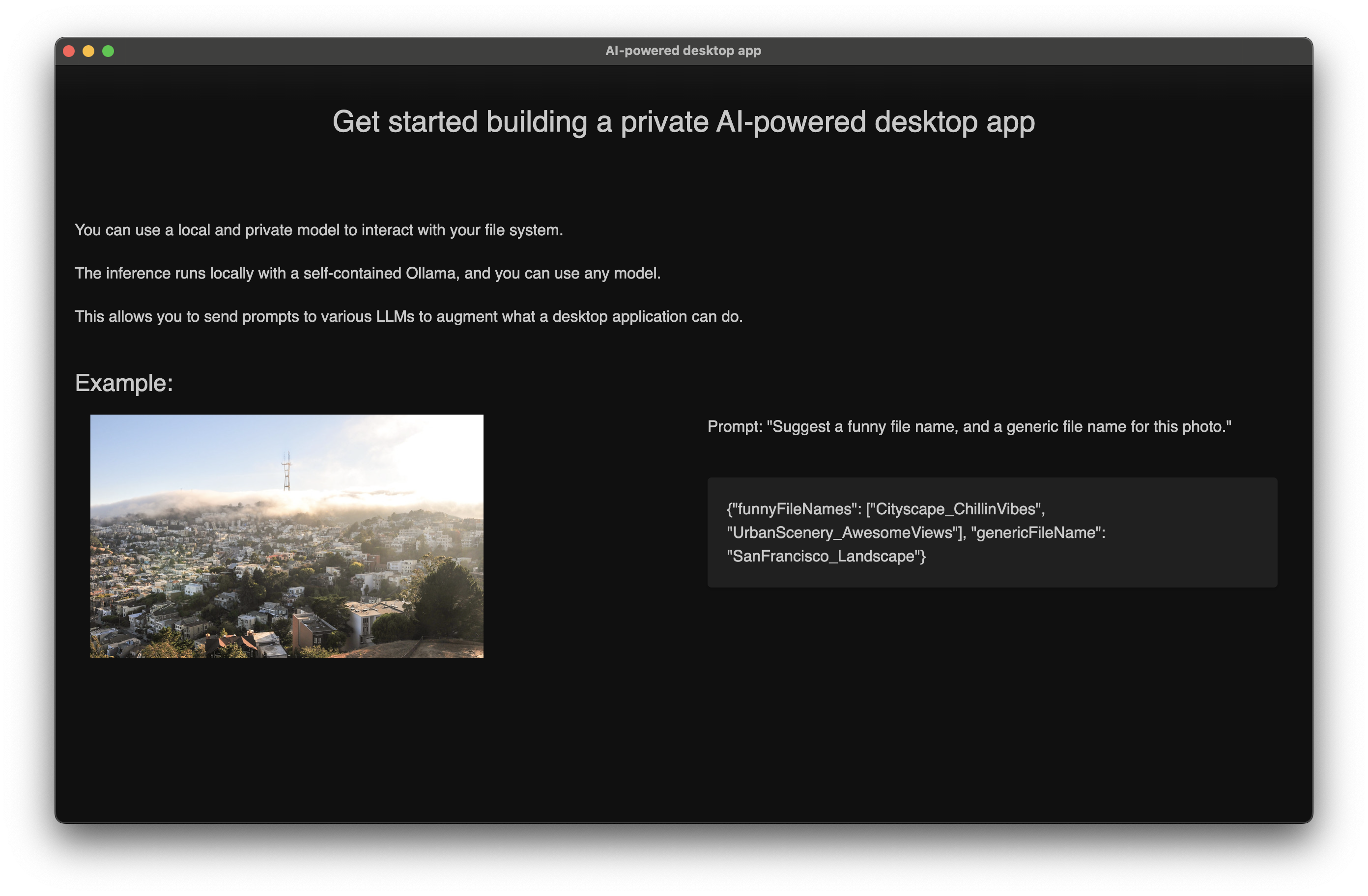

I’m now tinkering with this setup and will likely experiment with building single, task-focused AI apps that run locally. In the meantime, I’ve created a repo from the original chatd codebase as a blank boilerplate for this setup, with the bare minimum to get started: what you need to interact with a model through Electron’s APIs, the bundling logic, and a basic UI.
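In an Electron app, the UI (renderer process) usually shouldn't talk to the model directly. One common pattern is to register an IPC handler in the main process with `ipcMain.handle` and call it from the renderer with `ipcRenderer.invoke`. The sketch below registers such a handler against a minimal interface that matches the shape of `ipcMain.handle`, so it can run without Electron. The channel name `llm:generate`, `IpcMainLike`, and `registerLlmHandler` are hypothetical and not taken from the boilerplate.

```typescript
// Minimal interface matching the part of Electron's ipcMain used here.
// In the real main process you would pass `ipcMain` imported from "electron".
interface IpcMainLike {
  handle(channel: string, listener: (event: unknown, ...args: unknown[]) => unknown): void;
}

// Any function that returns a completion, e.g. a call to a local Ollama server.
type GenerateFn = (model: string, prompt: string) => Promise<string>;

const GENERATE_CHANNEL = "llm:generate"; // hypothetical channel name

// Register a handler so the renderer can request a completion over IPC.
function registerLlmHandler(ipc: IpcMainLike, generateText: GenerateFn, model: string): void {
  ipc.handle(GENERATE_CHANNEL, async (_event, prompt) => {
    // Validate input from the renderer before touching the model.
    if (typeof prompt !== "string" || prompt.length === 0) {
      throw new Error("prompt must be a non-empty string");
    }
    return generateText(model, prompt);
  });
}
```

On the renderer side, a preload script would typically expose this with `contextBridge.exposeInMainWorld`, wrapping `ipcRenderer.invoke("llm:generate", prompt)`. That keeps Node and network access out of the UI code, and the model call lives entirely in the main process.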

Enjoy!